Nvidia H100 | A New Revolution in GPU-Powered Artificial Intelligence

The Nvidia H100 is a professional data center GPU built by Nvidia to substantially improve AI performance. It was announced in March 2022 and brought a major leap in GPU performance over the previous generation. The GPU contains 80 billion transistors, making it one of the most capable chips for handling large amounts of data. It is widely used in self-driving cars, medical diagnosis systems, and other systems that depend heavily on AI performance, which makes it one of the most widely used high-performance graphics processing units. This blog covers what you need to know about the Nvidia H100 GPU.

What Makes the Nvidia H100 Chipset So Important for Newer Graphics Cards?

The NVIDIA H100 chipset has become important for newer graphics cards because of its AI-focused hardware. It has the computing power to train large language models efficiently. These models help machines interpret data in a more human-like way by generating text and translating languages. The chipset is in high demand due to its accelerated AI performance, and many IT businesses rely on it to deliver better performance in their AI applications.


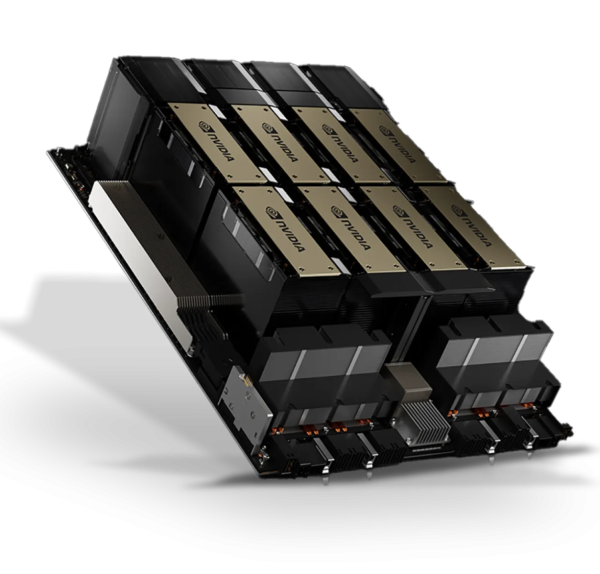

Nvidia H100

Why is the H100 Chipset so Important for AI?

The Nvidia H100 is a highly specialized GPU built for training generative AI. It is in such high demand because it can drastically speed up the development of next-generation AI models at companies such as Meta, OpenAI, and Stability AI, as mentioned in Nvidia news shared on its official website. The H100 is designed specifically to increase the AI performance of advanced systems like self-driving cars, medical diagnosis systems, live open-world gameplay, and other AI-powered applications.

Applications, systems, and software based on artificial intelligence require enormous processing power. Self-improving AI solutions built on large language models, such as translators and chatbots that communicate live with users, require even more computing capability. Before the launch of the H100, many AI software companies relied on the A100, Nvidia's most powerful data center GPU at the time. With the H100, Nvidia has set a new bar for specifications, making it the most in-demand chipset among tech giants working with AI. Well-known AI companies such as Meta, OpenAI, Stability AI, and Twelve Labs have been using the H100 and its predecessor in their applications.

OpenAI Adopted Nvidia H100

OpenAI is one of the pioneers that adopted the Nvidia H100 and its predecessor, the A100, to offer an immersive AI experience. According to Nvidia’s official press release, OpenAI used Nvidia’s A100 GPUs to train and run the large language model behind ChatGPT, a system used by millions of people around the world that takes live prompts and returns results as text, images, and audio. OpenAI is reported to be using the Nvidia H100 on its Azure supercomputer to further its AI research.

Meta AI Adopted Nvidia H100

Nvidia’s Hopper architecture has become part of Meta’s AI program. Meta’s Grand Teton AI system, built around Hopper GPUs, was adopted in Meta’s data centers to enhance its deep learning recommender models and content understanding. The Hopper-based system allowed Meta to increase its host-to-GPU bandwidth by 4 times, double its computing capability, and improve its data network.

Nvidia H100 vs A100

The Nvidia A100 is the predecessor of the H100 GPU. Here are the key differences between the two models.

| Features | Nvidia H100 | Nvidia A100 |
| --- | --- | --- |
| Release Date | Announced March 22, 2022 | Announced May 14, 2020 |
| Architecture | Hopper | Ampere |
| CUDA Cores | 14,592 (PCIe) / 16,896 (SXM) | 6,912 (PCIe) / 6,912 (SXM) |
| Memory Type | HBM3 | HBM2e |
| Tensor Cores | 4th Generation | 3rd Generation |
| Software Ecosystem | CUDA 12.x, cuDNN, TensorRT, Nvidia AI Enterprise, RAPIDS | CUDA 11.x, cuDNN, TensorRT, RAPIDS |
| Memory Bandwidth | Up to 3 TB/s | 1.6 TB/s |
| Required Power | 700 W (SXM), 350 W (PCIe) | 400 W (SXM), 300 W (PCIe) |
| Memory Capacity | 80 GB | 40 GB or 80 GB |
| Peak FP8 Performance | Up to 1.97 petaflops | Not available (no FP8 support) |
| Transformer Engine | Available | Not available |
| Process Size | 4 nm (TSMC 4N) | 7 nm |
| Transistors | 80,000 million | 54,200 million |
| Bus Interface | PCIe 5.0 x16 | PCIe 4.0 x16 |
| Die Size | 814 mm² | 826 mm² |
| TDP (PCIe) | 350 W | 300 W |
| Power Connector (PCIe) | 1x 16-pin | 8-pin EPS |
| Dimensions | 10.6 x 4.4 inches | 10.5 x 4.4 inches |
| Level 1 Cache | 256 KB (per SM) | 192 KB (per SM) |
| Level 2 Cache | 50 MB | 40 MB |
| Pixel Rate | 42.12 GPixel/s | 225.6 GPixel/s |
| Texture Rate | 800.3 GTexel/s | 609.1 GTexel/s |
| FP16 (half) | 204.9 TFLOPS (4:1) | 77.97 TFLOPS (4:1) |
| FP32 (float) | 51.22 TFLOPS | 19.49 TFLOPS |
| FP64 (double) | 25.61 TFLOPS (1:2) | 9.746 TFLOPS (1:2) |
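
To put the throughput rows of the comparison table into perspective, here is a small Python sketch that turns them into speedup ratios. The figures are copied from the FP16, FP32, and FP64 rows above; the `speedup` helper is just an illustrative name, and real-world gains will depend on the workload.

```python
# Throughput figures (TFLOPS) copied from the FP16/FP32/FP64 rows of the table.
H100 = {"FP16": 204.9, "FP32": 51.22, "FP64": 25.61}
A100 = {"FP16": 77.97, "FP32": 19.49, "FP64": 9.746}


def speedup(new: dict, old: dict) -> dict:
    """Return the new/old throughput ratio for every precision both cards list."""
    return {name: new[name] / old[name] for name in new if name in old}


ratios = speedup(H100, A100)
for precision, ratio in ratios.items():
    print(f"{precision}: {ratio:.2f}x")
```

On these table figures, each precision comes out at roughly 2.6 times the A100's throughput.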

H100 Nvidia Graphics Card Specifications

The graphics card is built on TSMC’s 4 nm process, which significantly improves performance and efficiency. Although Nvidia’s GPUs have traditionally been built with a focus on graphics, the GH100 chip behind the H100 puts machine learning and compute capabilities first. Here are the detailed specifications:

Hopper Architecture

Nvidia’s Hopper architecture is a GPU architecture designed to meet the demands of advanced AI models and high-performance computing. Although it builds on the previous Ampere architecture, it offers significant computing advances to support data-intensive workloads. The new Hopper microarchitecture comes with features such as a Transformer Engine, fourth-generation Tensor Cores, fourth-generation NVLink, third-generation NVSwitch, and confidential computing for better data security. Let’s learn more about the Nvidia H100’s GPU architecture.

4th Generation Tensor Cores

Tensor cores are specialized units designed by Nvidia to perform the matrix multiply-and-accumulate operations at the heart of deep learning models. The fourth-generation tensor core is a new advancement in Nvidia’s Hopper architecture. These cores add support for FP8 (8-bit floating point), which lets the H100 reach up to 1.97 petaflops of AI performance, roughly double the FP16 speed on the same workload.
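
For readers who want to see what a matrix multiply-and-accumulate actually computes, here is a minimal pure-Python sketch of D = A x B + C. It runs on the CPU and only illustrates the arithmetic; it is not how tensor cores are programmed, and the function name is our own.

```python
def matmul_accumulate(a, b, c):
    """Return D = A x B + C for matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("columns of A must match rows of B")
    if len(c) != rows or any(len(row) != cols for row in c):
        raise ValueError("C must have the same shape as A x B")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) + c[i][j] for j in range(cols)]
        for i in range(rows)
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]
d = matmul_accumulate(a, b, c)
print(d)  # [[20, 23], [44, 51]]
```

A tensor core performs this kind of operation on small matrix tiles in hardware, which is why lower-precision formats translate so directly into higher throughput.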

Transformer Engine

The Transformer Engine is another new feature of the Hopper architecture, built to speed up transformer models such as large language models. It automatically balances speed and accuracy by choosing between FP8, FP16, and FP32 precision. Mixing FP8 and FP16 calculations in this way helps with tasks such as image recognition and faster, more precise object detection. The results of advanced generative AI models depend heavily on the Transformer Engine.
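
The trade-off between speed and accuracy comes down to how many bits each number gets. Python's standard library has no FP8 type, so the sketch below uses FP16 and FP32 as stand-ins (an assumption made purely for illustration) to show how a smaller format saves space but loses precision. The helper names are hypothetical.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a value in the given struct float format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def to_fp16(value: float) -> float:
    return round_trip(value, "<e")  # IEEE 754 half precision, 2 bytes


def to_fp32(value: float) -> float:
    return round_trip(value, "<f")  # IEEE 754 single precision, 4 bytes


x = 0.1
err16 = abs(to_fp16(x) - x)
err32 = abs(to_fp32(x) - x)
print(f"FP16: {struct.calcsize('<e')} bytes, rounding error {err16:.2e}")
print(f"FP32: {struct.calcsize('<f')} bytes, rounding error {err32:.2e}")
```

Half the bytes means twice as many values per memory transfer, at the cost of a larger rounding error. The same logic applies one step further down when moving from FP16 to FP8.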

Dynamic Programming Accelerator (DPX)

The Hopper architecture introduces DPX instructions that accelerate dynamic programming, a technique that solves a complex problem by breaking it into smaller subproblems and combining their solutions. With DPX, the H100 GPU executes dynamic programming tasks faster. This is another attractive feature that increases demand for the H100.
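
As a concrete example of dynamic programming, here is a short Python sketch of the classic edit distance problem. We picked it only for illustration: it runs on the CPU and does not use DPX, but it shows the pattern of building a big answer from smaller subproblems.

```python
def edit_distance(a: str, b: str) -> int:
    """Minimum number of single-character edits that turn a into b."""
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        curr = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            curr.append(min(
                prev[j] + 1,         # delete from a
                curr[j - 1] + 1,     # insert into a
                prev[j - 1] + cost,  # substitute or keep
            ))
        prev = curr
    return prev[-1]


print(edit_distance("kitten", "sitting"))  # 3
```

Each cell depends only on three neighbouring cells through a min-and-add step, which is the kind of inner loop that dynamic programming hardware support aims to speed up.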

Memory and Bandwidth

The H100 GPU comes with High Bandwidth Memory 3 (HBM3), a significant upgrade over HBM2e. It supports up to 80GB of HBM3 memory, a substantial upgrade from the 40GB of the original A100. The GPU has a base clock speed of 1095 MHz that can boost up to 1755 MHz, making it highly efficient at fast computing, frame rendering, and parallel task execution. On the GH100, the memory clock runs at up to 1593 MHz, striking the right balance between capacity and speed. It comfortably supports large-scale AI datasets and HPC workloads.

Multi-Instance GPU (MIG)

The Nvidia H100 GPU supports Multi-Instance GPU (MIG), which partitions the card into fully isolated instances, each with its own memory, cache, and compute cores. This lets the Hopper architecture support multi-tenant and multi-user configurations in virtualized environments. It can create up to seven GPU instances, each securely isolated from the others.

Advanced Cache Architecture

Nvidia’s H100 graphics card uses an advanced cache architecture, with Level 1 cache and shared memory in each streaming multiprocessor and a Level 2 cache that acts as a memory pool shared by all of them. The H100 has a larger L2 cache than previous models, which reduces latency, optimizes data flow, and enhances GPU performance. The cache architecture works with the memory bus to improve overall bandwidth and AI performance.

Scalability of Architecture for Advanced Networking Platform

An NVIDIA HGX H100 Delta is a strong choice for enterprises that process massive data sets and complex simulations over high-speed interconnects. It combines 8 Nvidia H100 GPUs into a single accelerated computing platform with 640GB of total GPU memory. The HGX platform is offered with both H100 and H200 GPUs for the highest AI performance and supports cloud networking. NVIDIA HGX Delta offers up to eight times the bandwidth for interpreting, storing, and processing data on a supercomputing platform.
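
The 640GB figure is simply 8 GPUs times 80GB each. The Python sketch below does that arithmetic and adds a rough check of whether a model's weights would fit. The parameter count in the example is hypothetical, and the estimate ignores activations, optimizer state, and other overhead, so treat it as a back-of-the-envelope aid only.

```python
GPUS_PER_HGX_BOARD = 8   # GPUs in one HGX H100 board, per the text above
MEMORY_PER_GPU_GB = 80   # H100 memory capacity


def total_memory_gb(gpus: int = GPUS_PER_HGX_BOARD,
                    per_gpu_gb: int = MEMORY_PER_GPU_GB) -> int:
    """Combined GPU memory of the board in GB."""
    return gpus * per_gpu_gb


def weights_fit(parameters: float, bytes_per_parameter: int) -> bool:
    """Rough check: do the model weights alone fit in the combined memory?"""
    weights_gb = parameters * bytes_per_parameter / 1e9
    return weights_gb <= total_memory_gb()


print(total_memory_gb())       # 640
print(weights_fit(175e9, 2))   # hypothetical 175B-parameter model at 2 bytes each
print(weights_fit(175e9, 4))   # the same model at 4 bytes each
```

In this example the 2-byte version (about 350GB of weights) fits, while the 4-byte version (about 700GB) does not, which is one reason lower-precision formats matter so much for large models.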

Want to adopt these architectural advances with Nvidia HGX Delta-Next? Get a 640GB graphic suite at discounted prices at Direct Macro!


NVIDIA HGX H100 Delta

When is the NVIDIA HGX H100 Delta your best choice?

It’s a massive unified GPU cluster that helps create a supercomputing platform for large enterprises. It helps in the following ways:

- Scaling up application performance on an accelerated computing platform.

- Running thousands of AI workloads simultaneously with greater accuracy at every scale.

- Enabling advanced cloud multi-tenancy and zero-trust security.

- Creating the world’s leading AI computing platform.

Nvidia H100 GPU Specifications

Graphic Card Architecture:

| Feature | Specification |
| --- | --- |
| GPU | H100 |
| Architecture | Hopper |
| Process technology | 4nm TSMC |
| Die size | 814 mm² |
| Transistor count | 80,000 million |
| DPX Dynamic Programming Accelerator | Available |
| Tensor Cores | 4th Generation |
| Bus interface | PCIe 5.0 x16 |
| Streaming Multiprocessors | 132 (SXM) / 114 (PCIe) |
| CUDA Cores | 16,896 (SXM) / 14,592 (PCIe) |
| Transformer Engine | Available |
| Software System Support | cuDNN, RAPIDS, TensorRT, Nvidia AI Enterprise |
| Thermal Design Power | Up to 700W |
| Cooling Options | Passive Cooling for SXM and PCIe Versions |
| Predecessor | Ampere Architecture |
| Successor | Blackwell Architecture |
| Release date | Announced March 2022 |
| Nvidia H100 price | $26,950 at Direct Macro |

Memory and Bandwidth:

| Feature | Specification |
| --- | --- |
| Memory Type | HBM3 |
| Capacity | 80GB |
| Memory Bus | 5120 bits |
| Bandwidth | 2.04 TB/s |
| Peak AI Performance | Up to 1.97 petaflops (FP8) |
| Clock speeds | 1095 MHz (boostable up to 1755 MHz) |
| Memory clock | 1593 MHz |
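
The bandwidth figure in this table can be cross-checked from the bus width and memory clock. The Python sketch below assumes two data transfers per clock cycle (double data rate), which is our assumption rather than something stated in the table, and the function name is illustrative.

```python
def memory_bandwidth_tb_s(bus_width_bits: int,
                          memory_clock_mhz: float,
                          transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in TB/s.

    transfers_per_clock=2 assumes double data rate (an assumption).
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e12


bandwidth = memory_bandwidth_tb_s(bus_width_bits=5120, memory_clock_mhz=1593)
print(f"{bandwidth:.2f} TB/s")  # 2.04 TB/s
```

With the 5120-bit bus and 1593 MHz memory clock listed above, the estimate lands on the same 2.04 TB/s shown in the table.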

Interconnectivity and Scalability:

| Feature | Specification |
| --- | --- |
| SXM Version | NVLink 4.0 |
| PCIe Version | PCIe Gen 5.0 |
| Multi-Instance GPU | Supports up to 7 instances |

H100 GPU Available at the Best Price at Direct Macro

Like its advanced features, the Nvidia H100 comes at a premium price. This GPU is expensive compared to others because of its unmatched performance, premium value, and limited production capacity on the manufacturer’s end. Demand is high and production is still active, so you will find Nvidia H100 prices ranging from $26,000 to $30,000, with discounts varying by retailer.

Want a GH100 GPU at an unbeatable price? Direct Macro has fantastic deals and discounts for its customers. Get the high-performance Nvidia GH100 GPU now. Whether you need a single unit or a bulk purchase, we have you covered. Get free delivery throughout the United States and Canada with a 30-day refund and exchange policy. Place your order now on our website!

Nvidia Tesla H100 Graphics Card Price on Direct Macro: $26,950

NVIDIA H100 Hopper PCIe 80GB Price on Amazon: $28,029.99

Tesla H100 80GB NVIDIA Price on eBay: $32,107.75

Conclusion:

Are you planning to upgrade your system’s graphic architecture? Want to leverage advanced artificial intelligence for better outcomes? Nvidia H100 is the perfect graphic processing unit for you. This blog shares everything you need to know before buying the NVIDIA H100 at the best market price.

FAQs:

How much does the Nvidia H100 cost?

The Nvidia H100 GPU costs anywhere from $26,000 to $30,000 on the market, and different retailers offer various discounts. You can get the Nvidia H100 GPU at the lowest price of $26,950 at Direct Macro.

Why is the Nvidia H100 chip in such high demand?

The Nvidia H100 delivers a major leap in GPU performance, with high-performance computing, an advanced memory cache, efficient support for generative AI models, and features such as the Transformer Engine and DPX instructions.

What is the useful life of the H100?

The average lifespan of an Nvidia H100 is around five years. However, this can be longer or shorter depending on usage and environment.
